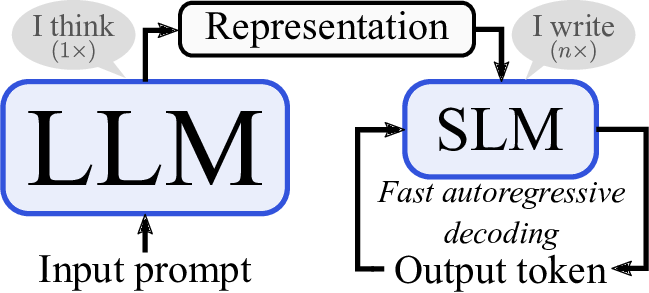

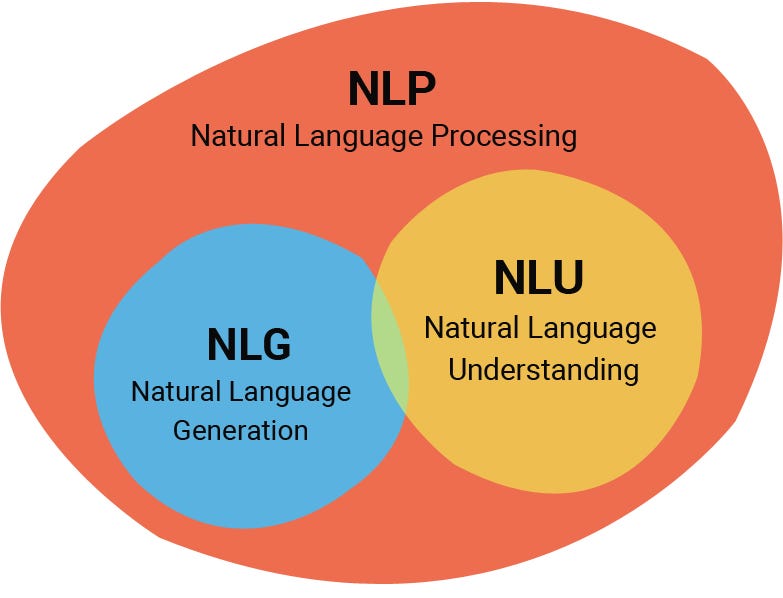

1) Optimise the Type of Language Model

Choosing the right type of language model is probably one of the first things to look at if you want to avoid extravagant costs. In many cases, a small language model (SLM) can do the language-modelling job the task actually requires, and it can run well on CPUs. LLMs, by contrast, cost a great deal whether we set up GPU servers ourselves or use closed-source services such as ChatGPT.

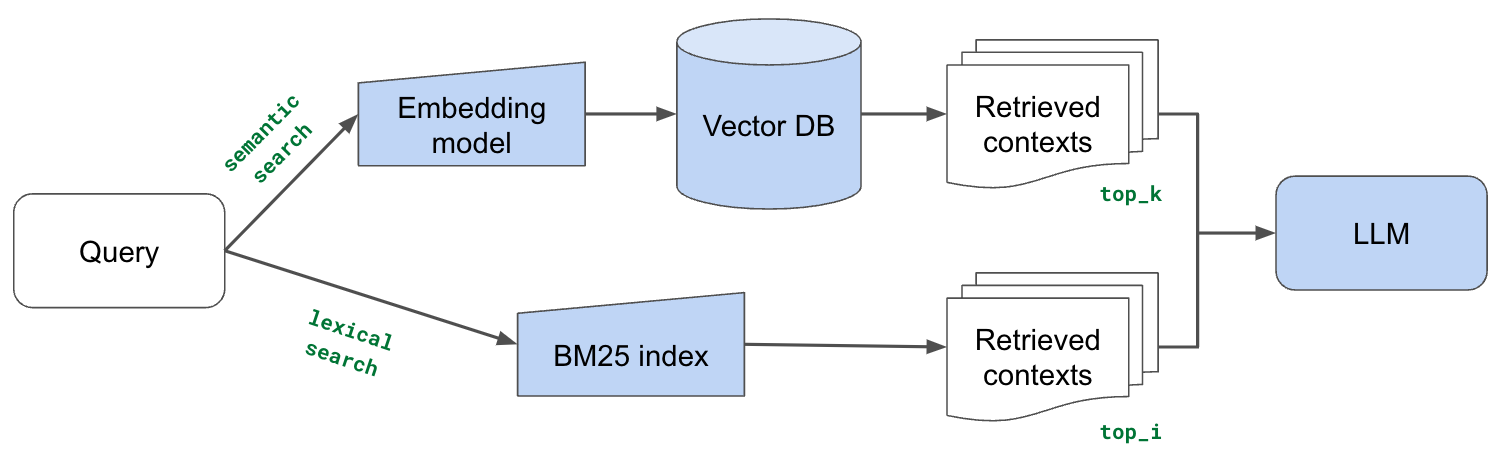

2) Know your need: RAG vs Fine-tune

In many cases, we do not need to host an LLM on our own GPU just because we want it to work with our data; fine-tuning is not the only option. RAG (retrieval-augmented generation) is a great way to integrate our data (as vectors) with any LLM without having to own or host a model ourselves, or hire a cloud company to host one for us. It is a matter of defining what your application's use cases actually need.
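To make the RAG idea concrete, here is a minimal sketch of the retrieval step. It assumes a toy bag-of-words "embedding" and cosine similarity in place of a real embedding model and vector database; the names `embed`, `retrieve`, `build_prompt` and the sample `DOCS` are hypothetical and only for illustration. The resulting prompt could be sent to any hosted or local LLM.

```python
import math
import re
from collections import Counter

def embed(text):
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=1):
    # Rank our own documents by similarity to the question and keep the top k.
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    # Put only the retrieved context in front of the question.
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical sample data standing in for a company knowledge base.
DOCS = [
    "Refunds are processed within 14 days of the return request.",
    "Our office is open Monday to Friday from 9 to 5.",
]

if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", DOCS))
```

The model itself is never retrained here: our data reaches it only through the prompt, which is why no dedicated GPU hosting is needed for this approach.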

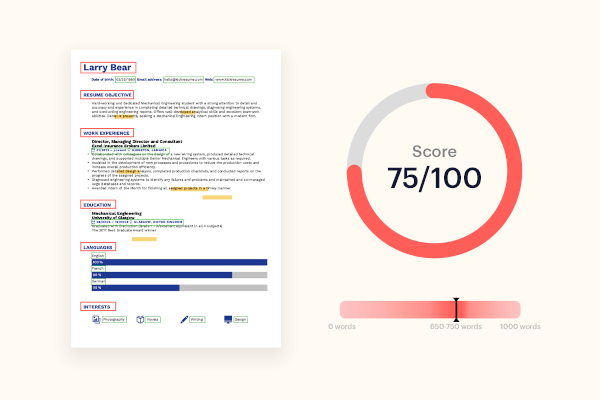

3) Large Language Model Router optimisation

The concept of a large language model router involves using a cascade of models to handle different types of questions. Cheaper models are used first, and if they are unable to provide a satisfactory answer, the question is passed on to a more expensive model. This approach leverages the significant cost difference between models and can result in substantial cost savings.
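The cascade described above can be sketched as follows. This is only an illustration under stated assumptions: the two models are stubs, the per-call costs are made-up numbers, and the confidence score each stub returns stands in for whatever "satisfactory answer" check a real system would use (for example a scoring model or a validation rule).

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Model:
    name: str
    cost_per_call: float  # hypothetical flat cost, not a real price
    answer: Callable[[str], Tuple[str, float]]  # returns (text, confidence in [0, 1])

def route(question: str, models: List[Model], threshold: float = 0.8):
    """Try models from cheapest to most expensive; stop at the first confident answer."""
    total_cost = 0.0
    text = ""
    for model in models:
        text, confidence = model.answer(question)
        total_cost += model.cost_per_call  # every attempt is paid for
        if confidence >= threshold:
            return model.name, text, total_cost
    # No model was confident: return the last (most expensive) model's answer.
    return models[-1].name, text, total_cost

def small_model(question: str) -> Tuple[str, float]:
    # Stub: pretends to be confident only on short questions.
    confident = len(question.split()) <= 5
    return "short answer", 0.9 if confident else 0.3

def large_model(question: str) -> Tuple[str, float]:
    # Stub: pretends to always be confident.
    return "detailed answer", 0.95

CASCADE = [Model("small", 0.001, small_model), Model("large", 0.03, large_model)]

if __name__ == "__main__":
    print(route("What is RAG?", CASCADE))
    print(route("Explain the trade-offs between retrieval and fine-tuning in detail", CASCADE))
```

Note that an escalated question pays for both calls, so the savings depend on how many questions the cheap model can settle on its own.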

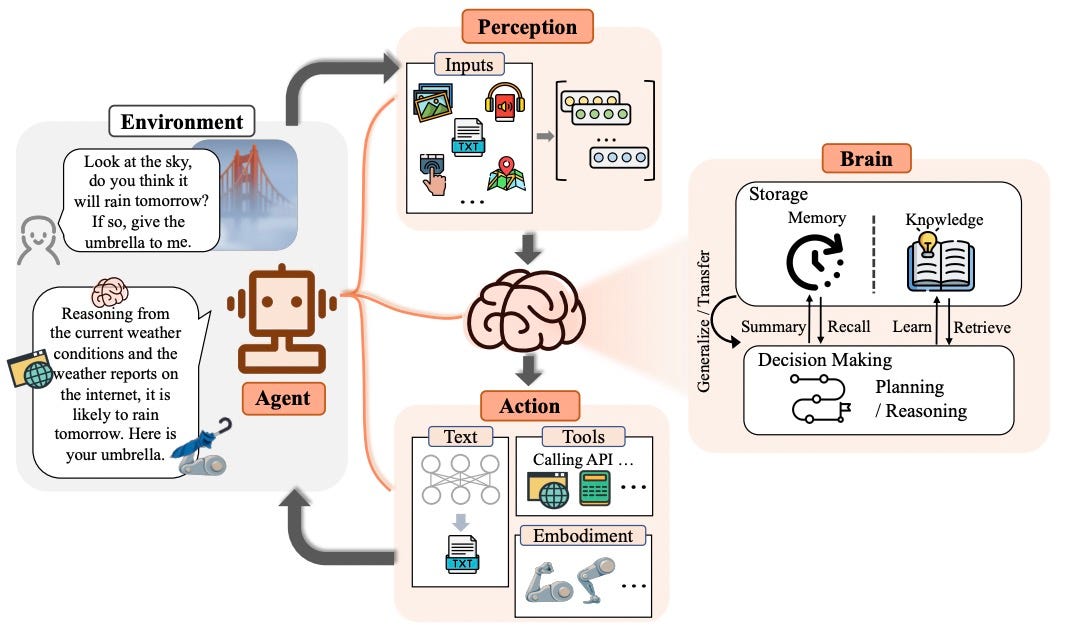

4) Multi-Agent Configuration

Setting up multiple agents, each using a different model, is another strategy. The first agent attempts to complete the task using a cheaper model, and if it fails, the next agent is invoked. With this multi-agent setup, you can achieve similar or even better success rates while significantly reducing costs.

5) LLM Lingua Implementation

LLM Lingua is a token optimization method introduced by Microsoft that focuses on optimizing the input and output of large language models. By removing unnecessary tokens and words from the input, you can significantly reduce the cost of running the model. This method is particularly effective for tasks such as summarization or answering specific questions based on a transcript. Fewer tokens also mean less processing time.
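The effect of trimming the input can be illustrated with a deliberately naive sketch. It does not reproduce Microsoft's method or use its library: it simply drops words from a hand-picked filler list, and it counts whitespace-separated words as a rough proxy for tokens. The `FILLER` set and both function names are hypothetical.

```python
# Hypothetical filler list; a real compressor decides what to drop in a smarter way.
FILLER = {"um", "uh", "basically", "actually", "really", "just", "well"}

def compress(text, filler=FILLER):
    # Keep every word whose lowercase, punctuation-stripped form is not a filler word.
    kept = [w for w in text.split() if w.lower().strip(".,!?;:") not in filler]
    return " ".join(kept)

def savings(original, compressed):
    # Fraction of words removed, used here as a rough stand-in for token savings.
    before = len(original.split())
    after = len(compressed.split())
    return 1 - after / before if before else 0.0

if __name__ == "__main__":
    transcript = "Um, so we basically just need to ship the fix."
    shorter = compress(transcript)
    print(shorter)
    print(f"{savings(transcript, shorter):.0%} fewer words")
```

Since input is typically billed per token, any fraction removed from the prompt translates roughly into the same fraction saved on input cost, provided the answer quality holds up.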
